TikTok has announced it is introducing a strike-based system for users whose videos repeatedly violate the company’s guidelines.

The social media giant announced the changes on 9 July.


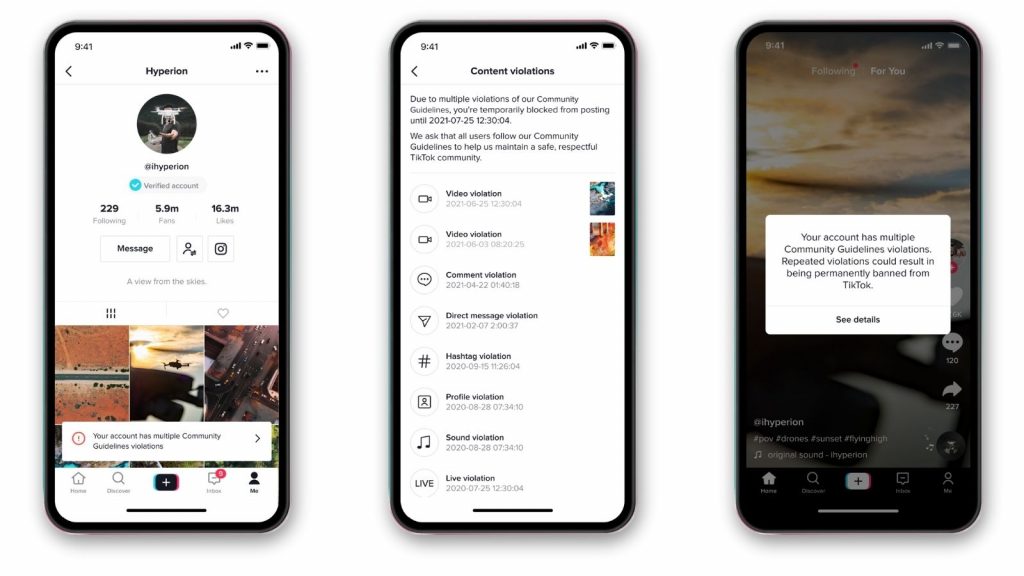

Going forward, users who repeatedly post videos that violate the guidelines will be notified and potentially lose access to their accounts.

“The new system counts the violations accrued by a user and is based on the severity and frequency of the violation(s),” Eric Han, TikTok’s head of US safety, said in a statement.

How the TikTok strike system and banning work

TikTok users who break the rules will be notified in the Account Updates section of their inbox. They can also check how many violations they’ve accumulated.

For the first violation, users will receive a warning in the app. However, if the violation falls under one of the company’s zero-tolerance policies, it will result in an automatic ban.

If users commit further violations after that first warning, they can lose the ability to upload videos, comment, or edit their profiles for up to 48 hours.

Alternatively, TikTok may restrict their account to view-only for up to a week. For the duration of the restriction, they cannot post content or engage with others.

If a user commits several violations, the app will warn them that they are on the verge of a permanent ban. If they persist, the account will be permanently removed.

Meanwhile, TikTok will start using automated technology to detect and remove some types of violating content from the app.

“Automation will be reserved for content categories where our technology has the highest degree of accuracy, starting with violations of our policies on minor safety, adult nudity and sexual activities, violent and graphic content, and illegal activities and regulated goods,” Han explained.

If the system finds a video that violates the app’s policies, it will remove it and notify the user.

Featured image: TikTok
