Social media was abuzz this week with news that the French landmark, the Eiffel Tower, was on fire.

While the news appeared concerning, with one social media post of the imagery reaching over 4.2 million likes, the report of the Eiffel Tower burning was later found to be a hoax.


This caused a stir on social media, especially since footage showed the Eiffel Tower engulfed in flames.

Using AI-generated imagery, a social media user created the illusion of the Eiffel Tower in flames. The post caused panic, but judging by the number of likes it collected, it also appears to have earned its creator notoriety.

The danger

What happens to someone who causes such panic, and how far is too far?

While French police have not confirmed any damage to the Eiffel Tower, one can imagine the number of calls they fielded, all as a result of a single user's post, illustrating how effective AI has become.

The danger lies in the ability of AI to generate realistic and believable images, which carries significant risks.

Some reasons for panic include:

Misinformation and the spreading of fake news. Malicious actors could use AI to create fake images or videos of politicians, celebrities, or ordinary individuals engaging in misleading activities. AI-generated content can also lead to confusion and, worse, social unrest.

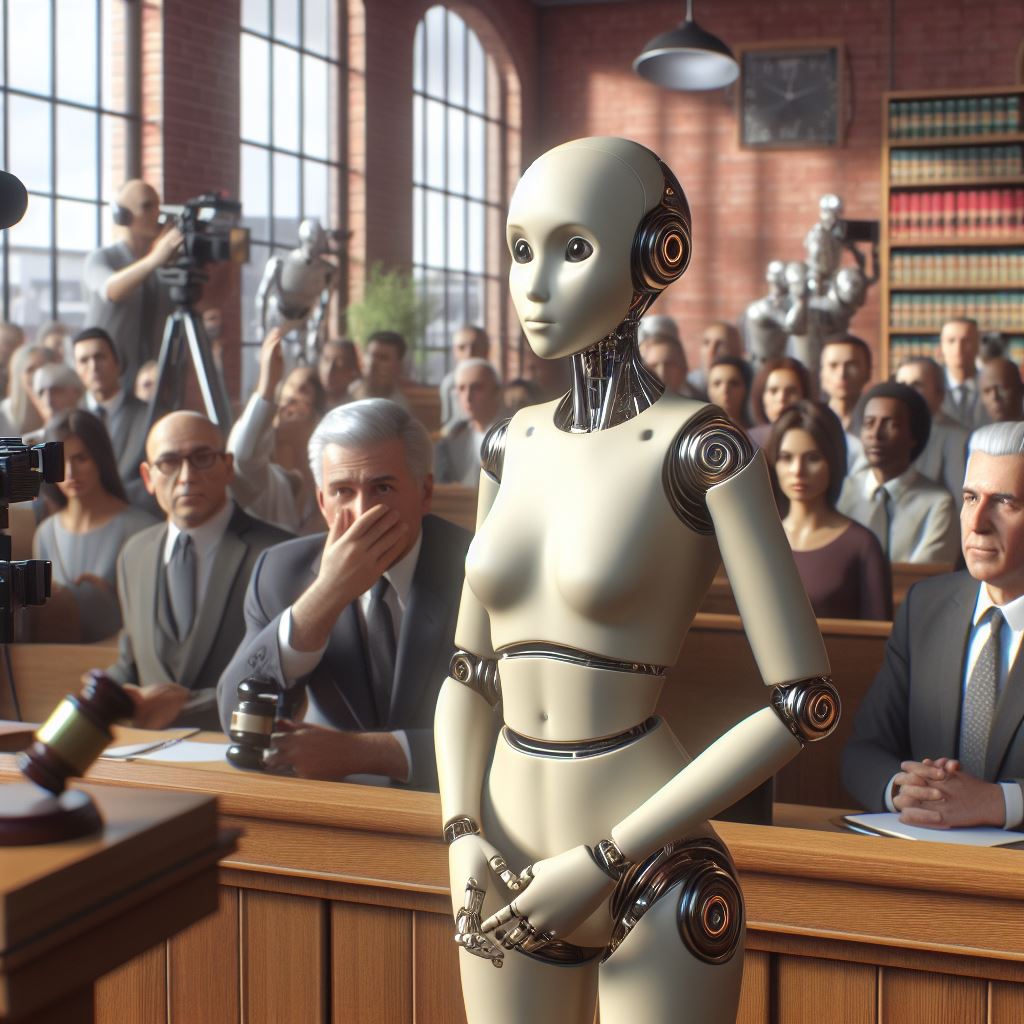

AI images could be used to create false evidence in legal cases or to support conspiracies, potentially impacting judicial proceedings and public opinion.

Worse

Civil unrest and emotional manipulation

AI could be used to generate highly realistic and graphic images of violence, gore, or other disturbing content. This could be emotionally traumatizing for viewers and could even trigger panic attacks or anxiety in vulnerable individuals.

Fake or fabricated disasters could lead to unnecessary panic and fear among the public.

From identity theft all the way to impersonating individuals online through video calls, the scope and reach of AI remain a wonder. Left unattended for too long, it may become a monster we never wanted to see running uncontrolled.

The potential for AI to cause panic highlights an urgent need for responsible development and regulation of the tech.

What does this look like?

Developers may need to be transparent about how their tools work, including how they could be misused.

We need better systems to detect and remove harmful and misleading AI-generated content before it spreads online. This is currently the issue.

It’s also important to educate the public about the capabilities and limitations of AI, so that users are equipped to think critically and flag AI-generated content.

AI imagery should be used for good, and never to harm. Maybe this should be the tagline for that AI top cop with a plan to punish the wicked.

If safeguards are implemented to promote the ethical use of AI, we can ensure that the tech benefits society as opposed to spreading panic over the Eiffel Tower burning.

Marcus Gopolang Moloko