
Facebook chimes in on harmful content with a renewed ‘remove, reduce, inform’ strategy

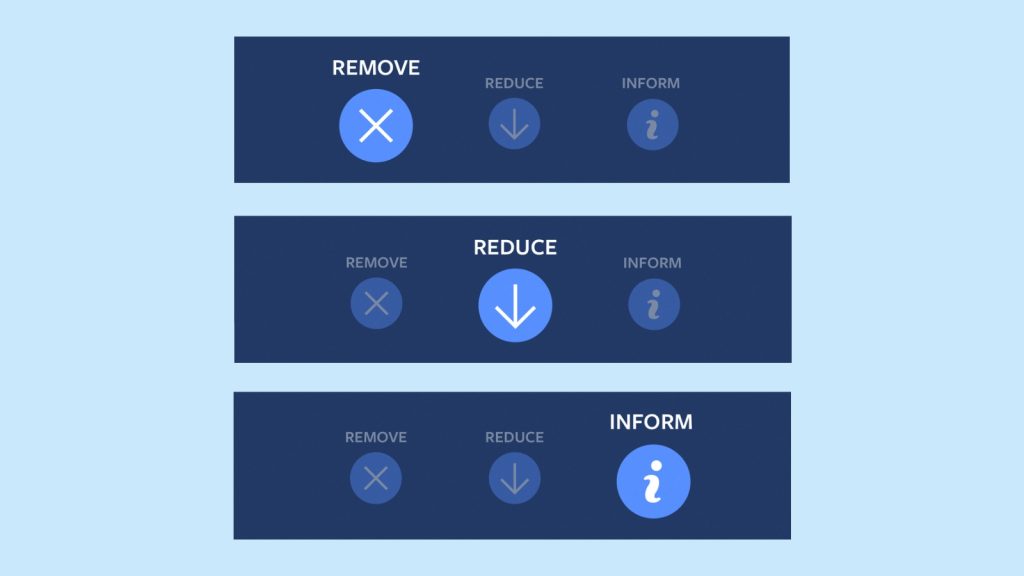

Facebook announced on Wednesday that it is updating its “remove, reduce, inform” strategy to further prevent harmful content from appearing on its platform.

“This involves removing content that violates our policies, reducing the spread of problematic content that does not violate our policies and informing people with additional information,” the company explained on its blog.

Remove

The updated version of Facebook’s “remove” strategy will now include a new section on its Community Standards site. This will give users a space to view the company’s reasons for making updates and policy changes.

Facebook will also implement a new Group Quality feature that will show users the violating content for which Groups have been flagged.

The latter should help people make more informed decisions when joining groups on the platform.

Reduce

To reduce harmful content, Facebook explains that it is expanding access for third-party fact-checkers to review content. The company is also reducing the reach of groups that repeatedly post false information, and adding a new Click-Gap signal to News Feed ranking.

“Click-Gap looks for domains with a disproportionate number of outbound Facebook clicks compared to their place in the web graph,” the company explained.

Such a gap can suggest that a domain’s popularity on Facebook does not reflect its standing on the wider web, which helps flag content with inauthentic popularity.

Instagram will also stop featuring inappropriate posts in hashtags and Explore, though followers of the accounts that post them will still see the content.

Inform

Facebook is launching a host of new features in News Feed that will help inform its users about content authenticity.

The Context Button, which tells users more about articles they see on Facebook, will now appear on pictures as well. This will give users an opportunity to find out more information about images they see in News Feed.

A new trust indicator will also appear on the Context Button to let people know whether what they are reading comes from a reputable source.

“The indicators we display in the context button cover the publication’s fact-checking practices, ethics statements, corrections, ownership and funding and editorial team,” Facebook said.

In Messenger, the company is combating impostors by including verified badges on well-known accounts.

“This tool will help people avoid scammers that pretend to be high-profile people by providing a visible indicator of a verified account,” the company noted.

Though these are just some of the many updates Facebook is making, the company seems determined to minimise unsavoury content across its platforms.

Feature image: Facebook