
Leap Motion now tracking individual finger bones for crazy 3D accuracy

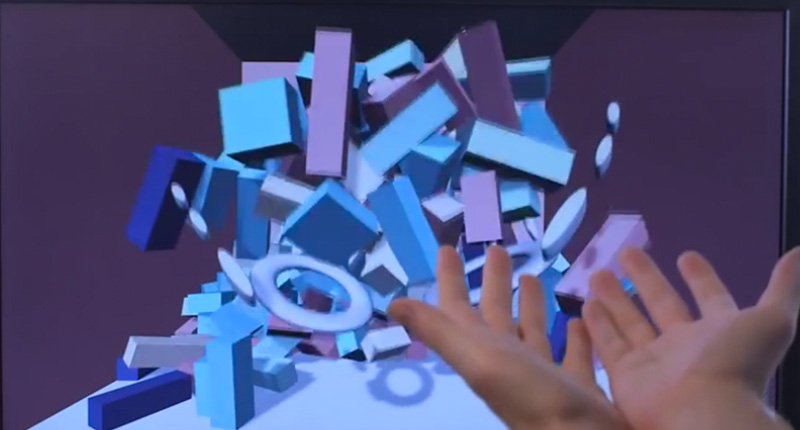

The gimmicky, if undeniably cool, Leap Motion tracker takes a giant leap forward as version 2 of its software goes public. On the company's official blog, Leap CEO Michael Buckwald announced the next generation of its motion-tracking software, which is now in beta for developers. While Leap V1 tracked fingers, palms and wrist movement, V2 steps it up by tracking the individual bones and joints within each finger. Because the beta is public, developers can update their software now and start experimenting with the new tools.

For those who want to be impressed, watch the video below, which demonstrates Leap Motion's V2 software in action.

We reviewed Leap Motion last year when it launched and found it fun but inessential. We'll be eating humble pie when we get our hands on V2: based on the video demonstration, the changes alone make Leap Motion look like a viable 3D interface.

Leap has added "grab and pinch" APIs to complement the new, more granular capture of hand and finger data. That granularity means even the tiniest gestures can now be recorded and acted on by Leap's hardware. Resistance to ambient infrared light, such as sunlight, has also been "massively improved", so there will be less interference with 3D motion detection. Joint detection also gains occlusion robustness: when the controller cannot see a finger, its last-known position is still tracked. Finally, and most importantly, Leap Motion V2 adds "hand labels", so parts are no longer just "finger 1", "thumb 2" and so on. They are now identified as the pinky, the right hand or the proximal phalanges (the finger bones nearest the palm).
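To make the features above a little more concrete, here is a minimal, hypothetical Python model of the kind of data V2 describes: a hand carrying a left/right label, fingers named by type, four named bones per finger, a toy pinch score and an occlusion filter that keeps a finger's last-known position when it drops out of view. This is not the Leap Motion SDK and does not reproduce its API. Every name here (`FingerType`, `BoneType`, `pinch_strength`, `OcclusionFilter`, `straight_finger`), the millimetre units and the 60 mm pinch threshold are illustrative assumptions, and the thumb-to-index distance heuristic is not a description of how Leap's grab and pinch APIs compute their values.

```python
from __future__ import annotations

import math
from dataclasses import dataclass
from enum import Enum

# Hypothetical data model for illustration only; NOT the Leap Motion SDK.
Vec3 = tuple[float, float, float]  # (x, y, z), assumed to be in millimetres

class FingerType(Enum):
    THUMB = "thumb"
    INDEX = "index"
    MIDDLE = "middle"
    RING = "ring"
    PINKY = "pinky"

class BoneType(Enum):
    # Ordered from the wrist outwards; iterating the enum follows this order.
    METACARPAL = "metacarpal"
    PROXIMAL = "proximal"
    INTERMEDIATE = "intermediate"
    DISTAL = "distal"

@dataclass
class Bone:
    type: BoneType
    prev_joint: Vec3  # joint nearer the wrist
    next_joint: Vec3  # joint nearer the fingertip

@dataclass
class Finger:
    type: FingerType
    bones: dict[BoneType, Bone]
    visible: bool = True  # False when the (hypothetical) sensor cannot see it

    def tip(self) -> Vec3:
        # The fingertip is the outer end of the distal bone.
        return self.bones[BoneType.DISTAL].next_joint

@dataclass
class Hand:
    is_left: bool  # the left/right "hand label"
    fingers: dict[FingerType, Finger]

def pinch_strength(hand: Hand, max_gap_mm: float = 60.0) -> float:
    """Toy heuristic: 1.0 when thumb and index tips touch, 0.0 at max_gap_mm or wider."""
    gap = math.dist(hand.fingers[FingerType.THUMB].tip(),
                    hand.fingers[FingerType.INDEX].tip())
    return max(0.0, min(1.0, 1.0 - gap / max_gap_mm))

class OcclusionFilter:
    """Reports each finger's last-known tip position while the finger is hidden."""

    def __init__(self) -> None:
        self._last_tip: dict[tuple[bool, FingerType], Vec3] = {}

    def tip(self, hand: Hand, finger_type: FingerType) -> Vec3 | None:
        finger = hand.fingers[finger_type]
        key = (hand.is_left, finger_type)
        if finger.visible:
            self._last_tip[key] = finger.tip()  # fresh reading: remember it
        return self._last_tip.get(key)  # None if this finger was never seen

def straight_finger(ftype: FingerType, base: Vec3, step: Vec3,
                    visible: bool = True) -> Finger:
    """Hypothetical helper: a straight finger whose bones each span one `step` offset."""
    bones: dict[BoneType, Bone] = {}
    start = base
    for btype in BoneType:
        end = (start[0] + step[0], start[1] + step[1], start[2] + step[2])
        bones[btype] = Bone(btype, start, end)
        start = end
    return Finger(ftype, bones, visible)

# Usage: a right hand whose thumb and index tips are 20 mm apart.
hand = Hand(is_left=False, fingers={
    FingerType.THUMB: straight_finger(FingerType.THUMB, (0.0, 0.0, 0.0), (0.0, 10.0, 0.0)),
    FingerType.INDEX: straight_finger(FingerType.INDEX, (20.0, 0.0, 0.0), (0.0, 10.0, 0.0)),
})
print(round(pinch_strength(hand), 3))  # 0.667

tracker = OcclusionFilter()
print(tracker.tip(hand, FingerType.INDEX))  # (20.0, 40.0, 0.0)
# The index finger drops out of view; the filter keeps its last-known tip.
hand.fingers[FingerType.INDEX] = straight_finger(
    FingerType.INDEX, (99.0, 0.0, 0.0), (0.0, 10.0, 0.0), visible=False)
print(tracker.tip(hand, FingerType.INDEX))  # (20.0, 40.0, 0.0)
```

Keying the occlusion filter on both the hand label and the finger type is what lets it tell a hidden left index finger apart from a hidden right one; without labels, a tracker would have no stable identity to attach the last-known position to.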

It's all fun and games for developers, who now have access to tools that can bring a tangible aspect to a once-intangible world. Buckwald explains his vision:

> This means taking things like sculpting a lump of clay, snapping together building blocks, or learning to play an instrument – the types of actions 99% of people just won’t or can’t do on a computer with traditional input devices – and making them possible and instantly accessible to anyone who knows how to do them with their physical hands in the real world.
>
> V2 tracking is a critical step forward on this path: it makes it much easier for developers to build transformative applications that are consistent with this vision. This is the first of several major updates we’ll be pushing prior to the V2 consumer release to make it easier for developers to build great content.
>
> The new tracking is the result of over a year of bleeding-edge research by our team and is a major step forward for the platform. However, there is still a great deal of work to be done – this is beta software and there are many more features that need to be added before the consumer launch, with many tracking enhancements in the pipeline – and we appreciate the developer community’s feedback as we continue to iterate and improve on it over the coming months.